The Future of Technology Innovation

Google’s approach to cooling its AI hardware shows how infrastructure decisions shape what developers can build. The way its Tensor Processing Units (TPUs) are cooled determines how densely they can be packed, how much energy the data center spends on overhead, and how consistently the chips perform. All of these affect the cloud resources available for training and serving models.

For developers and technology professionals, following these infrastructure developments helps inform strategic decisions about where and how to run demanding workloads. The implications extend beyond Google’s own data centers and may influence broader industry trends in hardware design and development practices.

Looking ahead, Google’s AI infrastructure remains an important piece of the evolving technology landscape and deserves continued attention as new developments emerge.

As AI models grow larger, the hardware that trains and serves them has become a significant concern for developers and tech professionals alike. This analysis explores the key aspects, technical implications, and practical applications of one such development: how Google cools its TPUs.

As the industry continues to push boundaries, understanding this infrastructure helps you stay ahead of the curve. This post examines the technical details and real-world applications, and offers insights that can help you use this hardware effectively.

Technical Implementation and Architecture

Understanding Google’s AI infrastructure requires examining both its technical foundations and its practical applications. At the scale of modern TPU deployments, one characteristic stands out from traditional approaches: how the hardware is cooled.

From a technical perspective, Google’s cooling design addresses common challenges faced by data center operators. It enables higher rack density, lower energy overhead, and more consistent chip performance.

The practical implications extend across multiple domains, influencing how teams approach problem-solving and solution architecture on the cloud resources built on this hardware.

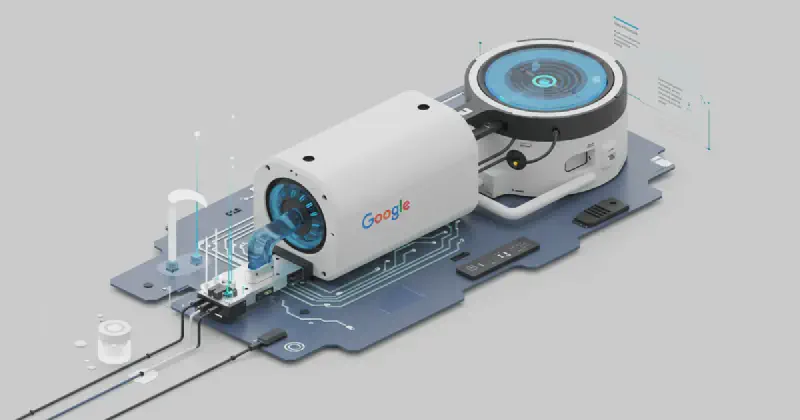

Beyond the Fan: How Google Chills Its AI Titans

As the AI arms race intensifies, the silent bottleneck isn’t just silicon or software; it’s thermodynamics. Google’s Tensor Processing Units (TPUs), the custom-built accelerators powering its AI empire, generate a colossal amount of heat. At its Hot Chips 2025 presentation, Google pulled back the curtain on its solution, revealing a strategic shift away from air and toward a far more potent medium: water. The core reason is simple physics. A given volume of water absorbs roughly 3,500 to 4,000 times more heat than the same volume of air for each degree of temperature rise, and it conducts heat about 20 to 25 times better. That makes liquid the most practical way to cool the dense, high-power TPU pods that train next-generation models.
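To put the water-versus-air comparison in numbers, the sketch below computes two ratios from approximate textbook property values near room temperature. These values are assumptions, not figures from Google's presentation: water k ≈ 0.60 W/(m·K), ρ ≈ 997 kg/m³, c_p ≈ 4180 J/(kg·K); air k ≈ 0.026 W/(m·K), ρ ≈ 1.18 kg/m³, c_p ≈ 1005 J/(kg·K). The calculation suggests that the "roughly 4,000×" figure reflects volumetric heat capacity, meaning how much heat a given volume of fluid carries per degree. The thermal-conductivity ratio is much smaller.

```python
"""Compare how well water and air move heat, using approximate
textbook property values near room temperature (assumed: ~25 °C, 1 atm)."""

from dataclasses import dataclass


@dataclass(frozen=True)
class Fluid:
    name: str
    conductivity_w_mk: float    # thermal conductivity k, W/(m·K)
    density_kg_m3: float        # density rho, kg/m^3
    specific_heat_j_kgk: float  # specific heat c_p, J/(kg·K)

    @property
    def volumetric_heat_capacity(self) -> float:
        """Heat stored per cubic metre per kelvin, J/(m^3·K) = rho * c_p."""
        return self.density_kg_m3 * self.specific_heat_j_kgk


# Assumed approximate property values, not measured data.
WATER = Fluid("water", conductivity_w_mk=0.60, density_kg_m3=997.0, specific_heat_j_kgk=4180.0)
AIR = Fluid("air", conductivity_w_mk=0.026, density_kg_m3=1.18, specific_heat_j_kgk=1005.0)


def ratios(a: Fluid, b: Fluid) -> tuple[float, float]:
    """Return (conductivity ratio, volumetric heat-capacity ratio) of a over b."""
    k_ratio = a.conductivity_w_mk / b.conductivity_w_mk
    c_ratio = a.volumetric_heat_capacity / b.volumetric_heat_capacity
    return k_ratio, c_ratio


if __name__ == "__main__":
    k_ratio, c_ratio = ratios(WATER, AIR)
    print(f"conductivity: water/air ≈ {k_ratio:.0f}x")
    print(f"volumetric heat capacity: water/air ≈ {c_ratio:.0f}x")
```

With these inputs, the script reports a conductivity ratio of about 23× and a volumetric heat-capacity ratio of about 3,500×. For moving heat out of a rack, the second ratio matters more, because the coolant carries heat away as it flows.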

Technical Implementation: A Direct-to-Chip Approach

Google’s strategy moves beyond simply making the data center colder. Instead of using massive, energy-hungry Computer Room Air Handler (CRAH) units to chill entire rooms, they are bringing the cooling directly to the source of the heat. This is a form of Direct-to-Chip (D2C) liquid cooling, a sophisticated closed-loop system integrated at the rack and server level.

Here’s how it works:

- Cold Plates: Custom-engineered copper or aluminum cold plates are mounted directly on top of the TPU processors. These plates contain micro-channels through which a coolant (treated water or a water-glycol mixture) flows, absorbing heat directly from the chip’s integrated heat spreader.

- Coolant Distribution Units (CDUs): Each rack, or a set of racks, is serviced by a CDU. This unit acts as the heart of the local loop, pumping cooled liquid to the server manifolds and receiving the hot liquid returning from the cold plates.

- Heat Exchange: The CDU then interfaces with the larger facility water loop. The heat captured from the TPUs is transferred from the local loop to the facility water, which is then pumped to external cooling towers or chillers to dissipate the heat into the atmosphere. This multi-stage process efficiently moves thermal energy out of the building.

This architecture allows Google to deploy racks with power densities exceeding 100 kW, a figure that is simply unattainable with traditional air cooling.
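A steady-state energy balance, Q = ṁ · c_p · ΔT, shows why density pushes toward liquid. The sketch below estimates the coolant flow needed to carry away a rack's heat. It uses the 100 kW figure above and an assumed 10 K coolant temperature rise across the rack. The property values and the temperature rise are illustrative assumptions, not Google's operating parameters.

```python
"""Estimate coolant flow needed to remove a rack's heat via the steady-state
energy balance Q = m_dot * c_p * delta_T. All inputs are illustrative assumptions."""


def mass_flow_kg_s(heat_w: float, specific_heat_j_kgk: float, delta_t_k: float) -> float:
    """Mass flow (kg/s) needed to absorb heat_w watts with a delta_t_k temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_w / (specific_heat_j_kgk * delta_t_k)


def volume_flow_m3_s(heat_w: float, specific_heat_j_kgk: float,
                     density_kg_m3: float, delta_t_k: float) -> float:
    """Volumetric flow (m^3/s) for the same heat load."""
    return mass_flow_kg_s(heat_w, specific_heat_j_kgk, delta_t_k) / density_kg_m3


RACK_HEAT_W = 100_000.0  # assumed 100 kW rack, matching the density figure above
DELTA_T_K = 10.0         # assumed coolant temperature rise across the rack

# Approximate properties: water c_p=4180 J/(kg·K), rho=997; air c_p=1005, rho=1.18.
water_m3_s = volume_flow_m3_s(RACK_HEAT_W, 4180.0, 997.0, DELTA_T_K)
air_m3_s = volume_flow_m3_s(RACK_HEAT_W, 1005.0, 1.18, DELTA_T_K)

if __name__ == "__main__":
    print(f"water: {water_m3_s * 60_000:.0f} L/min")  # m^3/s -> L/min
    print(f"air:   {air_m3_s:.1f} m^3/s")
```

Under these assumptions, water needs about 144 L/min, while air needs roughly 8.4 m³ every second through a single rack. Moving that much air would require very high fan power.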

Performance and Real-World Impact

The performance gains from this liquid-cooled strategy are multifaceted:

- Higher Power Density: The primary benefit is the ability to cool chips with a much higher Thermal Design Power (TDP). This allows Google to pack more powerful TPUs into a single server and more servers into a single rack, which is critical for the massive, interconnected TPU Pods used to train models like Gemini.

- Improved Efficiency (PUE): By eliminating the need for a significant portion of the fans inside servers and data halls, Google substantially reduces its energy overhead. This leads to a lower Power Usage Effectiveness (PUE), a key data center efficiency metric defined as total facility energy divided by the energy delivered to IT equipment. A PUE closer to 1.0 means more energy goes to computation and less to cooling and other overhead.

- Stable Performance: Liquid cooling provides more stable and lower operating temperatures for the TPUs. This thermal consistency reduces the risk of thermal throttling, allowing the chips to maintain peak performance for longer durations and potentially extending their operational lifespan.
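PUE is total facility energy divided by the energy delivered to IT equipment. The sketch below computes it for two hypothetical facilities. The load and overhead figures are invented to illustrate the metric; they are not measurements from Google's data centers.

```python
"""Power Usage Effectiveness: PUE = total facility energy / IT equipment energy.
The example loads below are hypothetical, chosen only to illustrate the metric."""


def pue(it_kw: float, cooling_kw: float, other_overhead_kw: float = 0.0) -> float:
    """Return PUE for a facility drawing it_kw for computing plus overheads."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    total_kw = it_kw + cooling_kw + other_overhead_kw
    return total_kw / it_kw


def overhead_fraction(pue_value: float) -> float:
    """Share of total facility energy that does not reach IT equipment."""
    return 1.0 - 1.0 / pue_value


if __name__ == "__main__":
    # Hypothetical 1 MW IT load: heavier air-cooling overhead vs lighter liquid-cooling overhead.
    air = pue(it_kw=1000.0, cooling_kw=400.0, other_overhead_kw=100.0)
    liquid = pue(it_kw=1000.0, cooling_kw=100.0, other_overhead_kw=100.0)
    print(f"air-cooled PUE ≈ {air:.2f}, overhead {overhead_fraction(air):.0%}")
    print(f"liquid-cooled PUE ≈ {liquid:.2f}, overhead {overhead_fraction(liquid):.0%}")
```

In this made-up example, a PUE of 1.50 means a third of the facility's energy goes to overhead. At 1.20, that share falls to about a sixth.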

Comparison with Alternatives

| Cooling Method | Pros | Cons | Google’s Use Case |
|---|---|---|---|
| Traditional Air Cooling | Simple, well-understood, lower initial cost. | Low thermal capacity, inefficient at high density, noisy. | Inadequate for high-density TPU pods; relegated to lower-power infrastructure. |
| Direct-to-Chip Liquid | Excellent thermal performance, high efficiency (low PUE), enables extreme density. | Higher complexity, potential for leaks, requires facility plumbing. | The chosen “sweet spot” for balancing extreme performance with manageable infrastructure. |
| Two-Phase Immersion | Highest cooling potential, no server fans. | Extremely complex, uses expensive dielectric fluids, difficult server maintenance. | Currently too operationally complex for Google’s hyperscale needs, but a potential future path. |

Ultimately, Google’s adoption of direct-to-chip liquid cooling isn’t just an engineering choice; it’s a strategic necessity. It is the foundational technology that enables them to build and operate the warehouse-scale computers required to stay at the bleeding edge of artificial intelligence.

The Ripple Effect: How Liquid Cooling Changes the Game

Google’s shift to liquid cooling for its TPUs isn’t just an internal engineering feat; it’s a paradigm shift with significant practical implications for developers, the tech industry, and the future of computation itself. While you might not be installing a liquid cooling loop in your own server rack tomorrow, the effects of this move will ripple through the cloud services you use and the applications you build.

Impact on Developers and the Tech Industry

For developers and ML engineers, the primary benefit is access to more powerful and consistent compute resources. Air-cooled systems often face the challenge of thermal throttling, where a chip’s performance is automatically reduced to prevent overheating. Liquid cooling greatly reduces this bottleneck.
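A toy steady-state model can illustrate why lower thermal resistance reduces throttling. It assumes die temperature T = T_coolant + P · R_th, where R_th is the die-to-coolant thermal resistance in K/W. It also assumes that power scales linearly with clock speed, which is a simplification. Every number below (chip power, resistances, coolant temperatures, throttling threshold) is hypothetical and does not describe any real TPU.

```python
"""Toy steady-state model of thermal throttling. All numbers are hypothetical.

Die temperature is modelled as T = T_coolant + P * R_th, where R_th (K/W) is the
thermal resistance from die to coolant. Power is assumed to scale linearly with
clock speed (a simplification; real chips scale super-linearly with voltage).
"""


def sustained_clock_fraction(max_power_w: float, r_th_k_per_w: float,
                             coolant_c: float, t_max_c: float) -> float:
    """Largest clock fraction in [0, 1] that keeps the die at or below t_max_c."""
    headroom_k = t_max_c - coolant_c
    if headroom_k <= 0:
        return 0.0  # no thermal headroom: any power would exceed the limit
    return min(1.0, headroom_k / (max_power_w * r_th_k_per_w))


CHIP_POWER_W = 600.0  # hypothetical accelerator power at full clock
T_MAX_C = 85.0        # hypothetical throttling threshold

# Hypothetical air path: warmer inlet, higher resistance. Liquid path: cooler, lower resistance.
air = sustained_clock_fraction(CHIP_POWER_W, r_th_k_per_w=0.10, coolant_c=35.0, t_max_c=T_MAX_C)
liquid = sustained_clock_fraction(CHIP_POWER_W, r_th_k_per_w=0.05, coolant_c=30.0, t_max_c=T_MAX_C)

if __name__ == "__main__":
    print(f"air-cooled: {air:.0%} of peak clock; liquid-cooled: {liquid:.0%}")
```

With these made-up inputs, the air-cooled chip can sustain only about 83% of its peak clock. The liquid-cooled chip stays at 100% with thermal headroom to spare.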

- Unlocking Peak Performance: Developers using Google Cloud can run larger, more complex AI models for longer durations with less risk of throttling-induced slowdowns. This can mean faster training times and the ability to tackle problems that were previously computationally prohibitive.

- Setting a New Industry Standard: Google’s success puts pressure on other cloud providers and hardware manufacturers. The industry increasingly views advanced cooling not as a niche for overclockers, but as a core requirement for high-density AI and HPC infrastructure. This accelerates innovation in data center design and thermal management across the board.

Use Cases and Applications Unleashed

The ability to pack more computational power into a smaller, more efficient footprint opens the door for a new wave of applications.

- Massive-Scale AI Training: Training foundational models like large language models (LLMs) or complex climate simulation models requires sustained, petaflop-scale computing. Liquid-cooled TPUs provide the stable, high-power environment necessary for this, accelerating research in fields from drug discovery with AlphaFold to generative art.

- High-Frequency Inference: For applications requiring real-time responses, such as autonomous driving perception systems or live language translation, inference speed is critical. Densely packed, liquid-cooled hardware can handle a higher volume of requests with lower latency in a smaller physical and energy footprint.

- Scientific Computing (HPC): Fields like computational fluid dynamics, genomic sequencing, and financial modeling can leverage this enhanced hardware density to run more detailed simulations faster, leading to quicker scientific breakthroughs and more accurate market predictions.

Future Prospects and Actionable Insights

This move signals a clear trend: the era of air cooling for high-end AI accelerators is coming to an end. As chip power densities continue to increase, liquid cooling will become the default, not the exception.

Here are some actionable takeaways:

- For Cloud Architects: When planning your infrastructure, consider the Total Cost of Ownership (TCO). While a VM instance on a liquid-cooled platform might seem equivalent on paper, the underlying stability and lack of thermal throttling can lead to more predictable performance and potentially lower costs for long-running, intensive jobs. Your architecture should account for this reliability.

- For ML Engineers: Don’t be afraid to scale up. The hardware is becoming more capable. This is the time to experiment with larger model architectures, bigger batch sizes, and more complex data augmentation pipelines. The infrastructure is ready to handle it.

```python
# Conceptual: your code doesn't change, but the underlying hardware
# allows you to push these parameters further without throttling.
from transformers import TrainingArguments

# build_massive_transformer_model is a placeholder for your own model-building
# code; it is not a library function.
model = build_massive_transformer_model(layers=96, attention_heads=64)
training_args = TrainingArguments(
    per_device_train_batch_size=2048,  # Larger batches become more feasible
    # ... other arguments elided in the original
)
```

- For the Industry: The focus on Green AI and sustainable computing is no longer just a talking point. Liquid cooling’s superior energy efficiency (lower PUE) is a critical component. As you evaluate technology partners, their commitment to sustainable and efficient infrastructure should be a key decision factor.
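The cloud-architect point about TCO can be made concrete. If throttling stretches a job's runtime, the bill grows with it, even at the same hourly rate. The sketch below compares the cost of one job at two sustained-throughput levels. The hourly price, job length, and 80% throttling level are hypothetical placeholders, not Google Cloud pricing or measured behavior.

```python
"""Hypothetical cost comparison: same hourly price, different sustained throughput."""


def job_cost(work_hours_at_peak: float, sustained_fraction: float,
             hourly_rate: float) -> tuple[float, float]:
    """Return (wall-clock hours, total cost) for a job that would take
    work_hours_at_peak at full speed but only sustains sustained_fraction of peak."""
    if not 0 < sustained_fraction <= 1:
        raise ValueError("sustained_fraction must be in (0, 1]")
    hours = work_hours_at_peak / sustained_fraction
    return hours, hours * hourly_rate


RATE = 10.0         # hypothetical price per instance-hour
PEAK_HOURS = 100.0  # hypothetical job length at full, unthrottled speed

steady_hours, steady_cost = job_cost(PEAK_HOURS, 1.0, RATE)
throttled_hours, throttled_cost = job_cost(PEAK_HOURS, 0.8, RATE)  # assumed 80% sustained

if __name__ == "__main__":
    print(f"unthrottled: {steady_hours:.0f} h, ${steady_cost:,.0f}")
    print(f"throttled to 80%: {throttled_hours:.0f} h, ${throttled_cost:,.0f}")
```

In this illustration, a 20% throughput loss turns a 100-hour job into a 125-hour job and raises its cost by the same 25%. That is why sustained performance, not just the listed instance price, belongs in a TCO estimate.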